Is anyone monitoring the integrity of your data, or could it be tampered with without anyone spotting it?

Is anyone verifying the authenticity of the data in the log, or are you blindly trusting it?

Are you logging enough to gain a real benefit? Or are you logging so much that you risk exposing something sensitive?

For a system to be verifiable, you need to think more carefully about what you're logging and why. Who relies on the log? Who can verify its contents and how? What is actually necessary to log to achieve this?

Exercise #1

We’ve designed a series of questions to help you start thinking about the design of your verifiable system.

Each question has worked examples that you can learn from. By the end of the exercise, you should have a better idea of your design for your verifiable system.

You could work through these questions alone or with your team, a bit like you might when doing a threat model.

Exercise #2

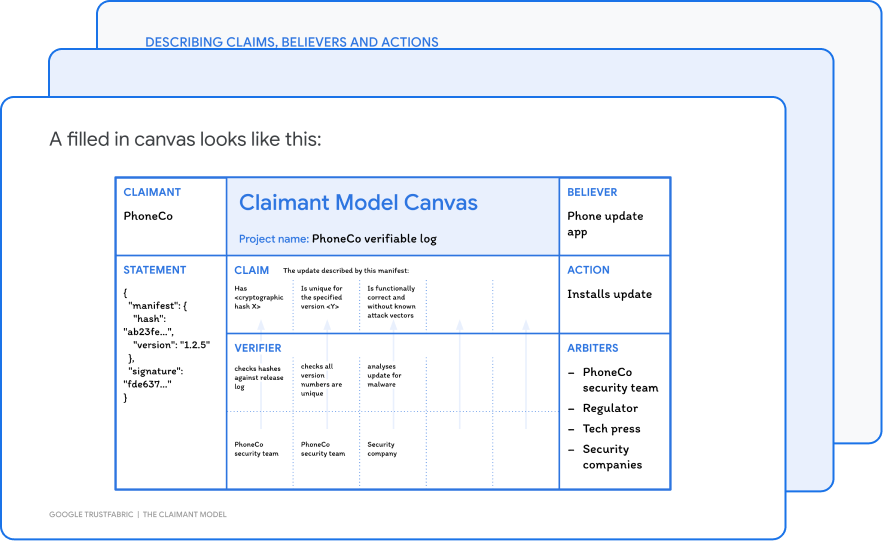

The Claimant Model is a framework you can use to define the roles and artifacts in your verifiable system.

The Claimant Model will help you get precise about the design of your verifiable system. This guide takes you step-by-step through how to map the Claimant Model for binary transparency.

The theory behind the Claimant Model is that verifiable systems revolve around a central claim. A claim is a statement that must be trusted to be true before a particular action is taken.

For example, before installing a software update, a phone’s updater app trusts the claim “Software update v1.2 was really made by PhoneCo” made by the manufacturer, PhoneCo.

Without a verifiable system, the updater app blindly trusts the digital signature from PhoneCo. This does offer a level of protection. But if PhoneCo's signing key were stolen, it would offer no visibility of a malicious update issued to a targeted user.

The Claimant Model helps PhoneCo design transparency and discoverability into their system, adding a layer of protection beyond cryptographic signing. Now they can detect malicious updates and discover whether their keys have been compromised.
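One way to picture this extra layer is sketched below. It is a minimal illustration under stated assumptions, not PhoneCo's or Trillian's actual design: the log is a plain in-memory list rather than a tamper-evident Merkle tree, signature checking is left out, and `ToyLog`, `updater_should_install`, and `find_unexpected_entries` are hypothetical names introduced here. The updater only installs updates that appear in the log, and PhoneCo compares the log against its own release records.

```python
import hashlib


def digest(update: bytes) -> str:
    """Return the SHA-256 hex digest that identifies an update."""
    return hashlib.sha256(update).hexdigest()


class ToyLog:
    """Append-only list standing in for a real verifiable log (assumption)."""

    def __init__(self) -> None:
        self._entries: list[str] = []

    def append(self, update: bytes) -> None:
        self._entries.append(digest(update))

    def contains(self, update: bytes) -> bool:
        return digest(update) in self._entries

    def entries(self) -> list[str]:
        return list(self._entries)


def updater_should_install(update: bytes, log: ToyLog) -> bool:
    """Believer: refuse any update that has not been logged.

    A real updater would also check PhoneCo's signature; omitted here.
    """
    return log.contains(update)


def find_unexpected_entries(log: ToyLog, known_releases: set[str]) -> list[str]:
    """Verifier: list logged updates that PhoneCo has no record of releasing."""
    return [entry for entry in log.entries() if entry not in known_releases]


log = ToyLog()
genuine = b"software update v1.2"
malicious = b"software update v1.2 with backdoor"

log.append(genuine)
known = {digest(genuine)}

# An unlogged update is rejected, so an attacker holding the stolen key has
# to log the malicious update, which makes it visible to PhoneCo.
installable_before_logging = updater_should_install(malicious, log)
log.append(malicious)
unexpected = find_unexpected_entries(log, known)
```

In this sketch the attacker cannot have it both ways: either the malicious update stays out of the log and the updater rejects it, or it goes into the log and shows up in `unexpected`.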

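The Claimant Model gives you a framework for describing the elements that appear in the examples below: the Claimant, the Claim, the Believer, the Verifier, and the Action.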

Below are two examples of applying the Claimant Model to Trillian-based solutions:

| Role or artifact | Certificate Transparency |
| --- | --- |
| Claimant | Any Certificate Authority |
| Claim | I, ${CertificateAuthority}, am authorized to issue $cert for $domain |
| Believer | Browser (user agent) |
| Verifier | Domain Owners |
| Action | Browser trusts certificate to encrypt communication with web server |

| Role or artifact | Go module checksums |
| --- | --- |
| Claimant | Proxy |
| Claim | I, ${Proxy}, will return only $hash as the checksum for $module at $version |
| Believer | Go client-side tool |
| Verifier | Anyone checking that each module and version appears at most once |
| Action | Go client-side tool trusts the code it’s downloaded for $module at $version |
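A mapping like the ones above can also be written down as data, which may help keep the design explicit. The sketch below is illustrative only: `ClaimantModel` and `duplicate_entries` are hypothetical names, the values `proxy.example`, `example.com/mod`, and `h1:abc` are made up, and the log is modeled as a simple list of tuples rather than a real verifiable log. Python's `string.Template` uses the same `$name` and `${name}` placeholder syntax as the claims in the tables. The second function sketches the verifier's check from the Go example: each module and version should appear at most once.

```python
from collections import Counter
from dataclasses import dataclass
from string import Template


@dataclass(frozen=True)
class ClaimantModel:
    """One mapping of the model: who claims what, and who relies on it."""

    claimant: str
    claim: Template
    believer: str
    verifier: str
    action: str

    def render_claim(self, **values: str) -> str:
        """Fill the claim's placeholders; raises KeyError if one is missing."""
        return self.claim.substitute(**values)


go_checksums = ClaimantModel(
    claimant="Proxy",
    claim=Template(
        "I, ${Proxy}, will return only $hash as the checksum for "
        "$module at $version"
    ),
    believer="Go client-side tool",
    verifier="Anyone checking that each module and version appears at most once",
    action="Go client-side tool trusts the code it's downloaded for "
    "$module at $version",
)


def duplicate_entries(entries):
    """Return every (module, version) pair that is logged more than once.

    `entries` is a list of (module, version, hash) tuples (assumed shape).
    """
    counts = Counter((module, version) for module, version, _ in entries)
    return sorted(key for key, n in counts.items() if n > 1)


claim_text = go_checksums.render_claim(
    Proxy="proxy.example",
    hash="h1:abc",
    module="example.com/mod",
    version="v1.0.0",
)

honest_log = [
    ("example.com/mod", "v1.0.0", "h1:abc"),
    ("example.com/mod", "v1.1.0", "h1:def"),
]
# A second entry for v1.0.0 would contradict the claim "only $hash".
bad_log = honest_log + [("example.com/mod", "v1.0.0", "h1:zzz")]
```

Calling `duplicate_entries(bad_log)` flags `example.com/mod` at `v1.0.0`, which is the kind of evidence a verifier would use to show that the claim was broken.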

Read more about the core Claimant Model, with a mapped example for Certificate Transparency.

A paper presenting a model for transparent ecosystems built around logs.

A story of how an engineer working on a download server uses the Claimant Model to implement a transparent, verifiable log.
